Take a look at the #HomeLab hashtag on Twitter, and you will see lots of technology experts who have some pretty extensive lab environments at home – add me to that list. OK, I’ve been on that list for a while…my lab has gone through numerous iterations:

- Started with Hyper-V initial release (2008 – KB950050)

- Ran StarWind Virtual SAN for a while – great performance offloading cache to RAM

- Moved away from local storage to SAN/NAS with FreeNAS

- LOTS of hardware iterations, before settling on SuperMicro and never looking back

- …that brings us to now

I had been running shared storage on FreeNAS for some time – both with NFS and iSCSI – on several 15K RPM drives, with SSDs for ZIL/L2ARC. The results were good: performance was solid, management was easy, etc. But not everything was rosy. Storage space was limited, as 15K drives are not exactly cheap – sure, most of the data is ‘throw away’ because it’s a lab, but there were several times where I had less than 100GB of free space and needed to stand up a few more machines for a demo. Oh, and 15K drives run HOT. With the lab currently living in my office, that’s a big deal, so it was time to make some changes.

First, I was tired of paying a tax and using a lot of RAM in the lab for a management server that did not really provide any benefit – don’t get me wrong, it is not a bad product at all, I simply feel that for a lab, it may be a bit overkill and honestly, it’s time to change.

The biggest change was moving my physical firewall to a virtual machine and converting its hardware into a XenServer host with a local 500GB NVMe drive. This system is a Supermicro SYS-5018D-MF with an E3-1220 V3, limited to 32GB of RAM – I added 24GB, as this box originally had just 8GB for the firewall.

The reasoning behind this box? Yes, it’s 1U with jet fans, but they are quiet when the CPU is below 25%. So what’s running on this box? Virtual machines that don’t change – core infrastructure such as domain controllers for the house, the firewall, and a few utility VMs. Thanks to the uber-fast SSD, all of these VMs are super fast. The other added bonus? Since this system is now self-contained, meaning no need for shared storage, AND it uses minimal power, I can shut down the other host and storage system if I’m away and don’t need them.

So how did the conversion from VMware ESX to XenServer go? Great! Some notes:

- Use the XenServer Conversion Manager appliance (download from the XenServer page: https://www.citrix.com/downloads/xenserver/product-software/xenserver-65.html )

- There is one component to be installed in the target XenServer pool, and a management tool – 2 separate downloads

- The source VM must be powered off to convert over

- Exporting to OVF works as well, but the Conversion Manager is far easier and more integrated

- For *nix workloads, you may need to boot into recovery mode to get things installed and/or use the BIOS boot order command

- `xe vm-param-set HVM-boot-policy="BIOS order" uuid=[uuid of your vm]`

- I believe this is due to the templates in XenServer

- In XenServer 6.5, NVMe drives just work and they are fast = Win
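If you have more than a couple of *nix VMs to fix up, the boot order command from the notes above can be wrapped in a small helper. This is only a rough sketch, assuming it runs on the XenServer host itself where the `xe` CLI is available; the function names and the VM name `my-linux-vm` are placeholders I made up for illustration, not anything the Conversion Manager provides.

```shell
#!/bin/sh
# Sketch only: helper names below are hypothetical, not part of XenServer.

# Print the xe command for a given VM UUID so it can be reviewed first.
boot_policy_cmd() {
    printf 'xe vm-param-set uuid=%s HVM-boot-policy="BIOS order"\n' "$1"
}

# Look up a VM's UUID by its name label, then apply the BIOS boot order policy.
# Assumes the name label is unique in the pool.
set_bios_boot() {
    vm_uuid=$(xe vm-list name-label="$1" params=uuid --minimal)
    if [ -z "$vm_uuid" ]; then
        echo "VM not found: $1" >&2
        return 1
    fi
    xe vm-param-set uuid="$vm_uuid" HVM-boot-policy="BIOS order"
}
```

Calling `set_bios_boot my-linux-vm` on the host should apply the policy to that VM, while `boot_policy_cmd <uuid>` just prints the command if you would rather paste it in by hand.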

Next, you’re probably wondering about the other bits in the picture, namely the two SSDs and 1TB HDDs that were not a part of the XenServer build. These are for the hardware that will be exclusively lab – meaning lots of storage, more CPU and RAM, and who cares if it dies. I’d also like the ability to use a mix of storage and add space if needed (yes, tiered local storage, essentially). And given the partnership between Nutanix and Citrix lately, my lab host has in fact been converted to Nutanix Community Edition!

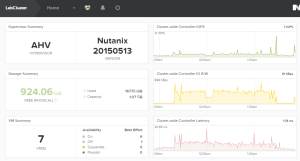

So it is just a single node, but it’s running the latest CE build of AHV with a few disks – both HDD and SSD. I really like the Image Service tool – give it an ISO file? No problem. VHD? Got it. VMDK? No worries. I also like that PXE boot is now enabled by default, as it was not in previous builds of CE.

More details to come as I start using this day to day!
